Take your machine learning models to production with new MLOps capabilities

This blog post was authored by Jordan Edwards, Senior Program Manager, Microsoft Azure.

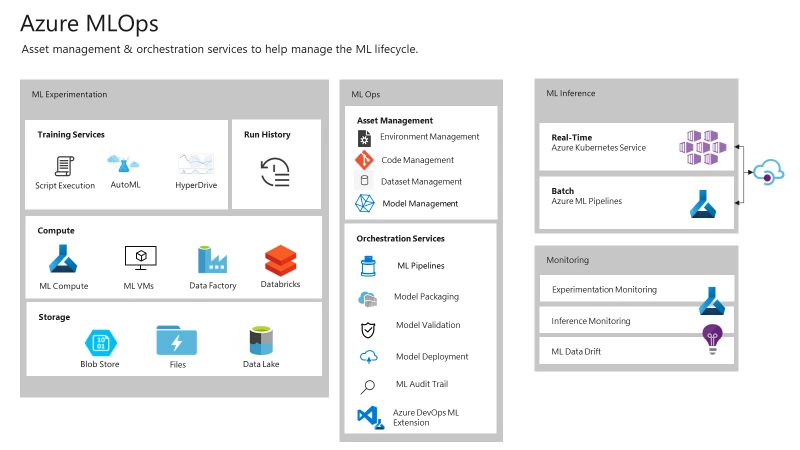

At Microsoft Build 2019, we announced MLOps capabilities in Azure Machine Learning service. MLOps, also known as DevOps for machine learning, is the practice of collaboration and communication between data scientists and DevOps professionals to help manage the production machine learning (ML) lifecycle.

Azure Machine Learning service’s MLOps capabilities provide customers with asset management and orchestration services, enabling effective ML lifecycle management. With this announcement, Azure is reaffirming its commitment to help customers safely bring their machine learning models to production and solve their business’s key problems faster and more accurately than ever before.

Here is a quick look at some of the new features:

Azure Machine Learning Command Line Interface (CLI)

Azure Machine Learning’s management plane has historically been accessible only through the Python SDK. With the new Azure Machine Learning CLI, you can easily automate a variety of tasks against the ML workspace, including:

- Compute target management

- Experiment submission

- Model registration and deployment

Management capabilities

Azure Machine Learning service introduced new capabilities to help manage the code, data, and environments used in your ML lifecycle.

Code management

Git repositories are commonly used in industry for source control management and as key assets in the software development lifecycle. We are including our first version of Git repository tracking – any time you submit code artifacts to Azure Machine Learning service, you can specify a Git repository reference. This is done automatically when you are running from a CI/CD solution such as Azure Pipelines.

Data set management

With Azure Machine Learning data sets you can version, profile, and snapshot your data to enable you to reproduce your training process by having access to the same data. You can also compare data set profiles and determine how much your data has changed or if you need to retrain your model.
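To make the idea of comparing data set profiles concrete, here is a minimal sketch in plain Python. It does not use the Azure Machine Learning SDK. The function names (`profile`, `mean_shift`, `needs_retraining`), the sample snapshots, and the drift threshold are all assumptions chosen for illustration, and the drift measure (shift of the mean in units of the baseline standard deviation) is just one simple option.

```python
import statistics


def profile(rows, columns):
    """Summarize each numeric column: count, mean, standard deviation, min, max."""
    summary = {}
    for col in columns:
        values = [row[col] for row in rows]
        summary[col] = {
            "count": len(values),
            "mean": statistics.fmean(values),
            "stdev": statistics.pstdev(values),
            "min": min(values),
            "max": max(values),
        }
    return summary


def mean_shift(baseline, current):
    """Return, per column, how far the mean moved, in baseline standard deviations."""
    shifts = {}
    for col, base in baseline.items():
        scale = base["stdev"] or 1.0  # avoid dividing by zero for constant columns
        shifts[col] = abs(current[col]["mean"] - base["mean"]) / scale
    return shifts


def needs_retraining(baseline, current, threshold=1.0):
    """Flag retraining when any column's mean shifts by more than `threshold` deviations."""
    return any(shift > threshold for shift in mean_shift(baseline, current).values())


# Two hypothetical snapshots of the same data set, taken at different times.
snapshot_v1 = [{"age": 30, "income": 50.0}, {"age": 40, "income": 60.0}, {"age": 50, "income": 70.0}]
snapshot_v2 = [{"age": 31, "income": 90.0}, {"age": 41, "income": 100.0}, {"age": 49, "income": 110.0}]

profile_v1 = profile(snapshot_v1, ["age", "income"])
profile_v2 = profile(snapshot_v2, ["age", "income"])
drift = mean_shift(profile_v1, profile_v2)
retrain = needs_retraining(profile_v1, profile_v2)
```

In this example the `age` column barely moves while `income` shifts by several standard deviations, so the sketch flags the model for retraining.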

Environment management

Azure Machine Learning Environments are shared across Azure Machine Learning scenarios, from data preparation to model training to inferencing. Shared environments simplify the handoff from training to inferencing and make it easier to reproduce a training environment locally.

Environments provide automatic Docker image management (and caching!), plus tracking to streamline reproducibility.

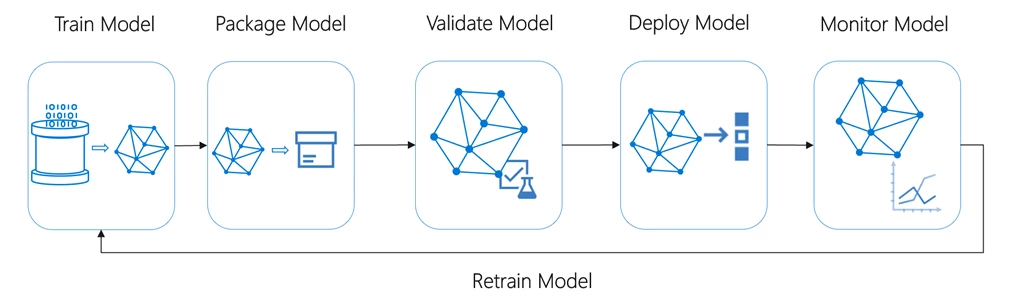
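One way to picture how image caching can follow from a shared environment definition is to derive a cache key from the definition itself. The sketch below is an assumption for illustration only and is not a description of how Azure Machine Learning implements caching. The names `environment_key` and `ImageCache`, the dictionary layout, and the package versions are hypothetical.

```python
import hashlib
import json


def environment_key(definition):
    """Derive a stable cache key from an environment definition.

    The definition is serialized with sorted keys so that two dictionaries
    with the same content always hash to the same value.
    """
    canonical = json.dumps(definition, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


class ImageCache:
    """Toy in-memory cache mapping environment keys to image tags (hypothetical)."""

    def __init__(self):
        self._images = {}
        self.builds = 0

    def get_or_build(self, definition):
        key = environment_key(definition)
        if key not in self._images:
            # A real system would build and push a Docker image here.
            self.builds += 1
            self._images[key] = f"env-image:{key}"
        return self._images[key]


# The same environment described for training and for inferencing (key order differs).
training_env = {"python": "3.6", "packages": ["scikit-learn==0.20.3", "pandas==0.24.2"]}
inference_env = {"packages": ["scikit-learn==0.20.3", "pandas==0.24.2"], "python": "3.6"}

cache = ImageCache()
training_image = cache.get_or_build(training_env)
inference_image = cache.get_or_build(inference_env)  # same content, so no rebuild
```

Because both definitions have identical content, the second lookup reuses the image built for the first, which is the property that makes a training environment reproducible at inference time.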

Simplified model debugging and deployment

Some data scientists have difficulty getting an ML model prepared to run in a production system. To alleviate this, we have introduced new capabilities to help you package and debug your ML models locally, prior to pushing them to the cloud. This should greatly reduce the inner loop time required to iterate and arrive at a satisfactory inferencing service, prior to the packaged model reaching the datacenter.

Model validation and profiling

Another challenge that data scientists commonly face is guaranteeing that models will perform as expected once they are deployed to the cloud or the edge. With the new model validation and profiling capabilities, you can provide sample input queries to your model. We will automatically deploy and test the packaged model on a variety of inference CPU/memory configurations to determine the optimal performance profile. We also check that the inference service is responding correctly to these types of queries.
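The query check described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the service's implementation: it covers only the response check and latency timing for sample queries, not the sweep over CPU/memory configurations. The `predict` stand-in model, the `is_valid` rule, and the sample queries are hypothetical.

```python
import time


def validate_and_profile(predict, sample_queries, is_valid):
    """Run each sample query through `predict`, check the response, and time it."""
    latencies = []
    failures = []
    for query in sample_queries:
        start = time.perf_counter()
        try:
            response = predict(query)
            ok = is_valid(response)
        except Exception:
            # Any error raised by the model counts as a failed query.
            ok = False
        latencies.append(time.perf_counter() - start)
        if not ok:
            failures.append(query)
    return {
        "queries": len(sample_queries),
        "failures": failures,
        "max_latency_s": max(latencies) if latencies else 0.0,
        "mean_latency_s": sum(latencies) / len(latencies) if latencies else 0.0,
    }


def predict(query):
    """Stand-in for a packaged model: a fixed linear scorer (hypothetical)."""
    return {"score": 0.5 * query["x1"] + 0.25 * query["x2"]}


def is_valid(response):
    """A response is valid when it carries a floating-point score."""
    return isinstance(response.get("score"), float)


# The last sample is missing a feature, so the stand-in model fails on it.
samples = [{"x1": 1.0, "x2": 2.0}, {"x1": 0.0, "x2": 4.0}, {"x1": 3.0}]
report = validate_and_profile(predict, samples, is_valid)
```

Running the same report against each candidate configuration and comparing the latency figures would be one way to pick a performance profile.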

Model interpretability

Data scientists want to know why models predict in a specific manner. With the new model interpretability capabilities, we can explain why a model is behaving a certain way during both training and inferencing.

ML audit trail

Azure Machine Learning manages all of the artifacts in your model training and deployment process. With the new audit trail capabilities, we are enabling automatic tracking of the experiments and data sets that correspond to your registered ML model. This helps to answer the question, “What code/data was used to create this model?”
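As a rough mental model of an audit trail, think of a record that ties each registered model version to the experiment run, data set version, and Git commit that produced it. The sketch below uses an in-memory registry. Every class, field name, and value in it is hypothetical and is not the schema that Azure Machine Learning uses.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class LineageRecord:
    """Links a registered model to what produced it (all field names hypothetical)."""
    model_name: str
    model_version: int
    experiment_run_id: str
    dataset_name: str
    dataset_version: int
    git_commit: str


class AuditTrail:
    """In-memory registry that answers: what code and data created this model?"""

    def __init__(self):
        self._records = {}

    def register(self, record):
        # Key each record by model name and version so lookups are unambiguous.
        self._records[(record.model_name, record.model_version)] = record

    def lineage(self, model_name, model_version):
        return asdict(self._records[(model_name, model_version)])


trail = AuditTrail()
trail.register(LineageRecord(
    model_name="churn-classifier",
    model_version=3,
    experiment_run_id="run-0042",
    dataset_name="customer-events",
    dataset_version=7,
    git_commit="9f2c1ab",
))
answer = trail.lineage("churn-classifier", 3)
```

Looking up a model version returns the run, data set version, and commit in one place, which is the question the audit trail is meant to answer.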

Azure DevOps extension for machine learning

Azure DevOps provides the tools that data scientists commonly use to manage code, work items, and CI/CD pipelines. With the Azure DevOps extension for machine learning, we are introducing new capabilities that make it easy to manage your ML CI/CD pipelines with the same tools you use for software development processes. The extension lets you trigger an Azure Pipelines release on model registration, easily connect an Azure Machine Learning workspace to an Azure DevOps project, and run a series of tasks designed to make interaction with Azure Machine Learning as easy as possible from your existing automation tooling.

Get started today

These new MLOps features in the Azure Machine Learning service aim to help users bring their ML scenarios to production by supporting reproducibility, auditability, and automation of the end-to-end ML lifecycle. We’ll be publishing more blogs that go in-depth on these features in the coming weeks, so follow along for the latest updates and releases.

- Learn more about Azure Machine Learning service

- Get started today with a free trial