It’s exciting to see the PyTorch community continue to grow and regularly release updated versions of PyTorch! Recent releases improve performance, ONNX export, TorchScript, the C++ frontend, the JIT, and distributed training. Several new experimental features, such as quantization, have also been introduced.

At the PyTorch Developer Conference earlier this fall, we presented how our open source contributions to PyTorch make it better for everyone in the community. We also talked about how Microsoft uses PyTorch to develop machine learning models for services like Bing. Whether you are an individual, a small team, or a large enterprise, managing the machine learning lifecycle can be challenging. We’d like to show you how Azure Machine Learning can make you and your organization more productive with PyTorch.

Streamlining the research-to-production lifecycle with Azure Machine Learning

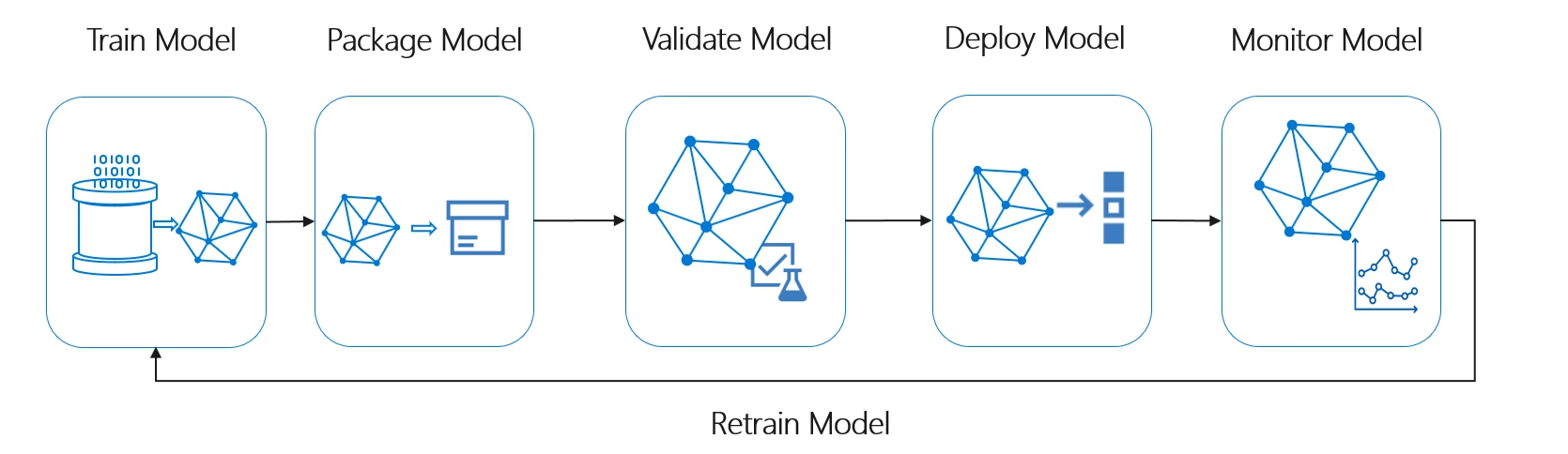

One of the benefits of using PyTorch 1.3 in Azure Machine Learning is Machine Learning Operations (MLOps). MLOps streamlines the end-to-end machine learning (ML) lifecycle so you can frequently update models, test new models, and continuously roll out new ML models alongside your other applications and services. MLOps provides:

- Reproducible training with powerful ML pipelines that stitch together all the steps involved in training your PyTorch model, from data preparation, to feature extraction, to hyperparameter tuning, to model evaluation.

- Asset tracking with dataset and model registries so you know who is publishing PyTorch models, why changes are being made, and when your PyTorch models were deployed or used in production.

- Packaging, profiling, validation, and deployment of PyTorch models anywhere from the cloud to the edge.

- Monitoring and management of your PyTorch models at scale in an enterprise-ready fashion, with eventing and notification of business-impacting issues like data drift.

Training PyTorch models

With MLOps, data scientists write and update their code as usual and regularly push it to a GitHub repository. Each push triggers an Azure DevOps build pipeline that runs code quality checks, data sanity tests, and unit tests, then builds an Azure Machine Learning pipeline and publishes it to your Azure Machine Learning workspace.

The Azure Machine Learning pipeline performs the following tasks:

- The train model task executes the PyTorch training script on Azure Machine Learning compute. It outputs a model file, which is stored in the run history.

- The evaluate model task compares the performance of the newly trained PyTorch model against the model in production. If the new model performs better than the production model, the following steps are executed. If not, they are skipped.

- The register model task takes the improved PyTorch model and registers it with the Azure Machine Learning model registry, which lets you version it.
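The train, evaluate, and register flow described above can be sketched in plain Python. This is a minimal illustration, not the Azure Machine Learning SDK: `ModelRegistry`, `run_pipeline`, the model paths, and the metric values are all hypothetical, and the sketch assumes that a higher metric is better and that the most recently registered version is the one in production.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ModelRegistry:
    """Hypothetical in-memory stand-in for a model registry."""

    versions: Dict[str, List[dict]] = field(default_factory=dict)

    def register(self, name: str, model_path: str, metric: float) -> int:
        # Each registration appends a new version; versions start at 1.
        entries = self.versions.setdefault(name, [])
        entries.append({"path": model_path, "metric": metric})
        return len(entries)

    def production_metric(self, name: str) -> Optional[float]:
        # Assumption: the latest registered version is the production model.
        entries = self.versions.get(name)
        return entries[-1]["metric"] if entries else None


def run_pipeline(
    name: str,
    train: Callable[[], str],
    evaluate: Callable[[str], float],
    registry: ModelRegistry,
) -> Optional[int]:
    """Train, evaluate, and register only if the new model is better."""
    model_path = train()                # train model task
    new_metric = evaluate(model_path)   # evaluate model task
    current = registry.production_metric(name)
    if current is not None and new_metric <= current:
        return None                     # not better: skip registration
    return registry.register(name, model_path, new_metric)  # register model task


# Usage with made-up paths and metric values.
registry = ModelRegistry()
v1 = run_pipeline("classifier", lambda: "outputs/model_a.pt", lambda p: 0.90, registry)
v2 = run_pipeline("classifier", lambda: "outputs/model_b.pt", lambda p: 0.85, registry)
v3 = run_pipeline("classifier", lambda: "outputs/model_c.pt", lambda p: 0.93, registry)
print(v1, v2, v3)
```

The second run returns `None` because its metric does not beat the registered model, so nothing new is registered. In a real pipeline the comparison metric and the definition of "better" would come from your own evaluation script.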

You can find example code for training a PyTorch model, doing hyperparameter sweeps, and registering the model in this PyTorch MLOps example.

Deploying PyTorch models

The Machine Learning extension for DevOps helps you integrate Azure Machine Learning tasks into your Azure DevOps project to simplify and automate model deployments. Once a new model is registered in your Azure Machine Learning workspace, you can trigger a release pipeline to automate your deployment process. Models can then be automatically packaged and deployed as a web service across test and production environments such as Azure Container Instances and Azure Kubernetes Service (AKS). You can even enable gated releases so that, once the model is successfully deployed to the staging or quality assurance (QA) environment, a notification is sent to approvers to review and approve the release to production. You can see sample code for this in the PyTorch MLOps example.
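The gated release described above boils down to a short control flow: deploy to staging, ask for approval, and promote to production only when approval is granted. The sketch below illustrates that logic in plain Python. It is not the Azure DevOps or Azure Machine Learning API; `Stage`, `gated_release`, `fake_deploy`, and the event strings are hypothetical names chosen for illustration.

```python
from enum import Enum
from typing import Callable, List


class Stage(Enum):
    STAGING = "staging"
    PRODUCTION = "production"


def gated_release(
    model_version: int,
    deploy: Callable[[int, Stage], bool],
    approve: Callable[[int], bool],
) -> List[str]:
    """Deploy to staging first, then to production only after approval."""
    events: List[str] = []
    if not deploy(model_version, Stage.STAGING):
        events.append("staging deployment failed")
        return events
    events.append("deployed to staging")

    # The gate: approvers are asked only after staging succeeded.
    events.append("approval requested")
    if not approve(model_version):
        events.append("release rejected")
        return events

    if deploy(model_version, Stage.PRODUCTION):
        events.append("deployed to production")
    else:
        events.append("production deployment failed")
    return events


# Usage with a stand-in deployment function that always succeeds.
deployed: List[Stage] = []


def fake_deploy(version: int, stage: Stage) -> bool:
    deployed.append(stage)
    return True


approved_events = gated_release(3, fake_deploy, lambda v: True)
rejected_events = gated_release(4, fake_deploy, lambda v: False)
print(approved_events)
print(rejected_events)
```

A rejected release never reaches the production deployment call, which is the property the approval gate is meant to guarantee.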

Next steps

We’re excited to support the latest version of PyTorch in Azure. With Azure Machine Learning and its MLOps capabilities, you can use PyTorch in your enterprise with a reproducible model lifecycle. Check out the MLOps example repository for an end-to-end example of how to enable a CI/CD workflow for PyTorch models.