We have heard from many customers about their challenges with detecting highly evasive threats. To help provide guidance, we published "Windows DNS server logging for network forensics" and an introduction to the Azure DNS Analytics solution. Today, we are discussing some of our more complex, heuristic techniques to detect malicious use of this vital protocol and how these detect key components of common real-world attacks.

These analytics focus on behavior that is common to a variety of attacks, ranging from advanced targeted intrusions to the more mundane worms, botnets and ransomware. Such techniques are designed to complement more concrete signature-based detection, giving the opportunity to identify such behavior before analyst-driven rules are deployed. This is especially important in the case of targeted attacks, where time to detection is typically measured in months. The longer an attacker has access to a network, the more expensive the eventual clean-up and removal process becomes. Similarly, while rule-based detection of ransomware is normally available within a few days of an outbreak, this is often too late to avoid significant brand and financial damage for many organizations.

These analytics, along with many more, are enabled through Azure Security Center once the collection of DNS logs is enabled on Azure-based servers. While this logging requires Windows DNS servers, the detections themselves are largely platform agnostic, so they can run across any client operating system configured to use an enabled server.

A typical attack scenario

A bad guy seeking to gain access to a cloud server starts a script that attempts to log in by brute-force guessing of the local administrator password. With no limit on the number of incorrect login attempts, after several days of effort the attacker eventually guesses the seemingly strong password St@1w@rt.

Upon successful login, the intruder immediately proceeds to download and install a malicious remote administration tool. This enables a raft of useful functions, such as the automated stealing of user passwords, detection of credit card or banking details, and assistance in subsequent brute force or Denial-of-Service attacks. Once running, this tool begins periodically beaconing over HTTP to a pre-configured command and control server, awaiting further instruction.

This type of attack, while seemingly trivial to detect, is not always easy to prevent. For instance, limiting incorrect login attempts appears to be a sensible precaution, but doing so introduces a severe risk of denial of service through lockouts. Likewise, although it is simple to detect large numbers of failed logins, it is not always easy to differentiate legitimate user activity from the almost continual background noise of often distributed brute force attempts.

Detection opportunities

For many of our analytics, we are not specifically looking for the initial infection vector. While our above example could potentially have been detected from its brute force activity, in practice, this could just as easily have been a single malicious login using a known password, as might be the case following exploitation of a legitimate administrator’s desktop or successful social engineering effort. The following techniques are therefore looking to detect the subsequent behavior or the downloading and running of the malicious service.

Network artifacts

Attacks, such as the one outlined above, have many possible avenues of detection over the network, but a consistent feature of almost all attacks is their usage of DNS. Regardless of transport protocol used, the odds are that a given server will be contacted by its domain name. This necessitates usage of DNS to resolve this hostname to an IP address. Therefore, by analyzing only DNS interactions, you get a useful view of outbound communication channels from a given network. An additional benefit to running analytics over DNS, rather than the underlying protocols, is local caching of common domains. This reduces their prevalence on the network, reducing both storage and computational expense of any analytic framework.

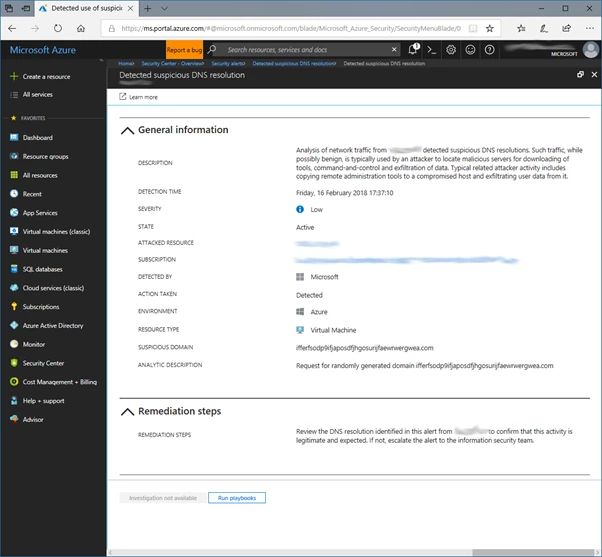

WannaCry Ransomware detected by Random Domain analytic.

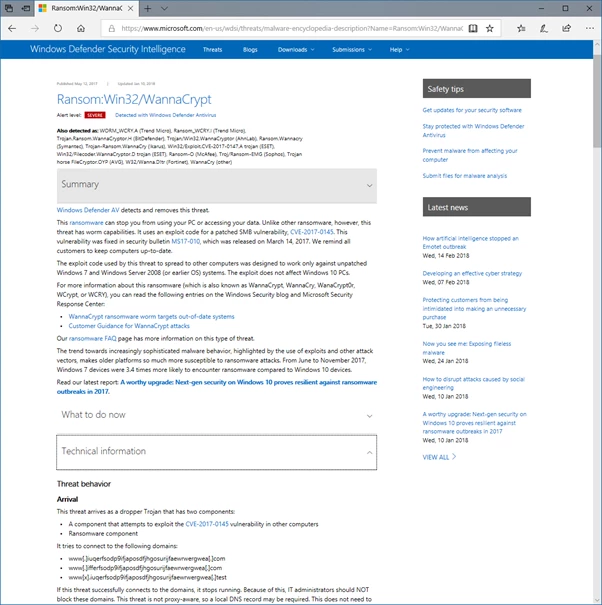

Malware report listing hard-coded domains enumerated by WannaCry ransomware.

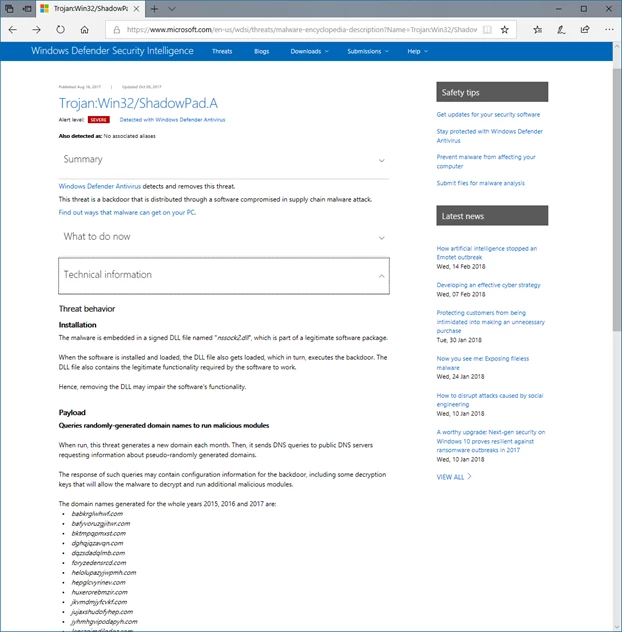

Random domains

Malicious software has a tendency towards randomly generated domains. This may be for many reasons, ranging from simple language issues, such as avoiding the need to tailor domains to each victim’s native language, to assisting in the automated registration of large numbers of such names while reducing the chances of accidental reuse or collision. This is highlighted by techniques such as Domain Generation Algorithms (DGAs), but random names are also frequently used for static download sites and command and control servers, such as in the above WannaCry example.

Detecting these “random” names is not always straightforward. Standard tests tend to only work on relatively large amounts of data. Entropy estimates, for instance, need input several times the size of the character set, or at least hundreds of bytes, to be meaningful. Each label of a domain name, on the other hand, is at most 63 characters in length. To address this issue, we have used basic language modelling, calculating the probabilities of various n-grams occurring in legitimate domain names. We also use these to detect the occurrence of highly unlikely combinations of characters in a given name.
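As a rough illustration of the n-gram idea, the sketch below trains a character bigram model on a handful of well-known names and scores labels by their average log-probability. It is not the analytic used in Azure Security Center: the tiny `LEGIT_LABELS` corpus, the smoothing constant `alpha`, and every function name here are assumptions made for the example. A real model would be trained on a large corpus of legitimate domains and would likely use longer n-grams.

```python
import math
from collections import Counter

# Characters allowed in a hostname label (letters, digits, hyphen).
ALPHABET = "abcdefghijklmnopqrstuvwxyz0123456789-"

def bigrams(label):
    """Split a label into character pairs, with ^ and $ marking start and end."""
    padded = "^" + label.lower() + "$"
    return [padded[i:i + 2] for i in range(len(padded) - 1)]

def train(labels):
    """Count how often each character pair and each leading character occurs."""
    pair_counts, first_counts = Counter(), Counter()
    for label in labels:
        for pair in bigrams(label):
            pair_counts[pair] += 1
            first_counts[pair[0]] += 1
    return pair_counts, first_counts

def pair_log_probs(label, model, alpha=0.1):
    """Smoothed log P(next char | current char) for every pair in the label."""
    pair_counts, first_counts = model
    outcomes = len(ALPHABET) + 1  # any alphabet character, or the end marker
    return [
        math.log((pair_counts[p] + alpha) / (first_counts[p[0]] + alpha * outcomes))
        for p in bigrams(label)
    ]

def mean_log_prob(label, model):
    """Average per-pair log-probability; lower means less like the training names."""
    scores = pair_log_probs(label, model)
    return sum(scores) / len(scores)

def least_likely_pair(label, model):
    """Return the single most surprising character pair in the label."""
    scores = pair_log_probs(label, model)
    worst = min(range(len(scores)), key=scores.__getitem__)
    return bigrams(label)[worst]

# Hypothetical, deliberately tiny training corpus (an assumption for this sketch).
LEGIT_LABELS = [
    "microsoft", "windows", "azure", "office", "google", "amazon",
    "wikipedia", "github", "outlook", "linkedin", "youtube", "facebook",
    "twitter", "netflix", "cloudflare", "stackoverflow",
]
model = train(LEGIT_LABELS)

for name in ("microsoft", "xqzjvkwpfh"):
    print(name, round(mean_log_prob(name, model), 2))
```

Averaging over the pairs keeps scores comparable between short and long labels, while `least_likely_pair` points at the individual character combination that most stands out. Any alerting threshold would need to be tuned against real traffic.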

Malware report detailing use of randomly generated domain names by ShadowPad trojan.

Periodicity

As mentioned, this attack involved periodic beaconing to a command and control server. For the sake of argument, let’s assume this is an hourly HTTP request. When attempting to make this request, the HTTP client will first attempt to resolve the server’s domain name through the local DNS resolver. This resolver will tend to keep some local cache of such resolutions, meaning that you cannot guarantee you will see a DNS request on every beacon. However, the requests you do see will be separated by some multiple of an hour.

In attempting to find such periodic activity, we use a version of Euclid’s algorithm to keep track of an approximate greatest common divisor (GCD) of the time between lookups of each specific domain. Once a domain’s GCD falls within the permitted error (in the exact case, once it falls to one), the domain is added to a bloom filter of domains to be ignored in further calculations. While the GCD remains greater than this error, we take the current GCD as the estimate of the beacon period, together with the number of observations, and calculate the probability of observing this many consecutive lookups on multiples of this period by chance. For example, the chance of randomly seeing three consecutive lookups to some domain, all on multiples of two seconds, is 1/2^3, or 1 in 8. On the other hand, as with our example, the probability of seeing three random lookups, precise to the nearest second, on multiples of one hour is 1/3600^3, or 1 in 46,656,000,000. Thus, the longer the time delta, the fewer observations we need before we are confident the activity is periodic.
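The periodicity check can be sketched roughly as follows. This is an illustration under stated assumptions rather than the production analytic: timestamps are whole seconds, a plain Python `set` stands in for the bloom filter, and the `error`, `min_period` and `threshold` parameters (and all names) are hypothetical. With `error=0` the chance calculation reduces to the 1/period^n figure described above; the `(2 * error + 1)` factor is an assumed generalization for a tolerance of a few seconds.

```python
def approx_gcd(a, b, error=1):
    """Euclid's algorithm, treating a remainder within `error` of a multiple as zero."""
    a, b = max(a, b), min(a, b)
    while b > error:
        r = a % b
        a, b = b, min(r, b - r)  # distance to the nearest multiple of b
    return a

def chance_probability(period, observations, error=0):
    """Chance that `observations` random intervals all land on multiples of `period`."""
    per_lookup = min(1.0, (2 * error + 1) / period)
    return per_lookup ** observations

class BeaconTracker:
    def __init__(self, error=1, min_period=60, threshold=1e-6):
        self.error = error            # permitted timing error, in seconds
        self.min_period = min_period  # below this, the GCD is treated as collapsed
        self.threshold = threshold    # report once chance falls below this
        self.last_seen, self.period, self.count = {}, {}, {}
        self.ignored = set()          # stand-in for the bloom filter

    def observe(self, domain, timestamp):
        """Record a lookup; return the estimated period if it looks periodic."""
        if domain in self.ignored:
            return None
        previous = self.last_seen.get(domain)
        self.last_seen[domain] = timestamp
        if previous is None:
            return None
        delta = timestamp - previous
        if delta <= self.error:
            return None  # near-simultaneous lookups carry no timing information
        known = self.period.get(domain)
        period = delta if known is None else approx_gcd(known, delta, self.error)
        if period < self.min_period:
            self.ignored.add(domain)  # GCD collapsed: stop tracking this domain
            return None
        self.period[domain] = period
        self.count[domain] = self.count.get(domain, 0) + 1
        chance = chance_probability(period, self.count[domain], self.error)
        return period if chance < self.threshold else None

tracker = BeaconTracker()
result = None
# Hourly beacon; caching hides one lookup and timing drifts by a second.
for ts in (0, 3600, 10801):
    result = tracker.observe("c2.example", ts)
# Irregular lookups whose interval falls below min_period.
for ts in (0, 17, 30):
    tracker.observe("noisy.example", ts)
print(result, sorted(tracker.ignored))
```

Folding each new interval into a running GCD means only a few values are stored per domain, and discarding collapsed domains early keeps that state small. Because the GCD is approximate, the estimated period can drift by up to the permitted error as more lookups arrive.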

Conclusion

As demonstrated in the above scenario, analyzing network artifacts can be extremely useful in detecting malicious activity on endpoints. While the ideal situation is the analysis of all protocols from every machine on a network, in practice, this is too expensive to collect and process. Choosing a single protocol that gives the highest chance of detecting malicious communications while minimizing the volume of data collected comes down to a choice between HTTP and DNS. By choosing DNS, you lose the ability to detect direct IP connections. In practice, these are rare, due to the relative scarcity of static IP addresses, alongside the potential to block such connections at firewalls. The benefit of examining DNS is its ability to observe connections across all possible network protocols from all client operating systems in a relatively small dataset. The compactness of this data is further aided by the default behavior of on-host caching of common domains.