The Open Compute Project (OCP) Global Summit 2019 kicks off today in San Jose, where a vibrant and growing community is sharing the latest innovations to make hardware more efficient, flexible, and scalable.

For Microsoft, our journey with OCP began in 2014, when we joined the foundation and contributed the very same server and datacenter designs that power our global Azure cloud, but it didn’t stop there. Each year at the OCP summit, we contribute innovation that addresses the most pressing challenges for our industry, ranging from a modular, globally compatible server design and universal motherboard with Project Olympus, to hardware security with Project Cerberus, to a next-generation specification for SSD storage with Project Denali.

This year we’re turning our attention to the exploding volume of data being created daily. Data is at the heart of digital transformation, and companies are leveraging data to improve customer experiences, open new markets, make employees and processes more productive, and create new sources of competitive advantage as they prepare for the future.

Data – the engine of Digital Transformation

The Global Datasphere*, which quantifies and analyzes the amount of data created, captured, and replicated across the world in any given year, is growing exponentially, and the growth shows no sign of slowing. IDC predicts* that the Global Datasphere will grow from 33 zettabytes (ZB) in 2018 to 175 ZB by 2025. To keep up with the storage demands stemming from all this data creation, IDC forecasts* that over 22 ZB of storage capacity must ship across all media types from 2018 to 2025, with nearly 59 percent of that capacity supplied by the HDD industry.

With this challenge on the horizon, the enterprise is fast becoming the world’s data steward once again. In the recent past, consumers were responsible for much of their own data, but their reliance on and trust in today’s cloud services, especially from connectivity, performance, and convenience perspectives, continue to increase, while their desire to store and manage data locally continues to decrease.

Moreover, businesses are looking to centralize data management and delivery (e.g., online video streaming, data analytics, data security, and privacy) as well as to leverage data to control their businesses and the user experience (e.g., machine-to-machine communication, IoT, and persistent personalization profiling). The responsibility to maintain and manage all this consumer and business data is driving the growth of cloud provider datacenters. As a result, the enterprise’s role as a data steward continues to grow, and consumers are not just allowing this, but expecting it. Beginning in 2019, more data will be stored in the enterprise core than in all the world’s existing endpoints.

The demand for data storage

A few years ago, we started looking at scale challenges in the cloud related to the growth of data and the future of data storage needs. The amount of data created in the Global Datasphere is the focus of the storage industry. Even though much data is discarded, overwritten, or sensed and never stored for longer than milliseconds, demand for storage capacity keeps growing across industries, governments, enterprises, and consumers.

To live in a digitized world where artificial intelligence drives business processes, customer engagements, and autonomous infrastructure, or where consumers’ lives are hyper-personalized in nearly every aspect of behavior (including what time we’ll be awakened based on the previous day’s activities, overnight sleep patterns, and the next day’s calendar), will require creating and storing more data than ever before.

In Data Age 2025*, IDC calculates that storage capacity shipments across all media types (HDD, SSD, NVM-flash/other, tape, and optical) over the four years from 2018 to 2021 will need to exceed the 6.9 ZB shipped across all media types over the previous 20 years. IDC forecasts* that over 22 ZB of storage capacity must ship across all media types from 2018 to 2025 to keep up with storage demands. Around 59 percent of that capacity will need to come from the HDD industry and 26 percent from flash technology over the same time frame, with optical storage the only medium showing signs of fatigue as consumers continue to abandon DVDs in favor of streaming video and audio.
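As a quick sanity check on the IDC figures quoted in this post, the short Python sketch below computes the compound annual growth rate implied by growth from 33 ZB in 2018 to 175 ZB in 2025, and the HDD and flash capacity implied by the 59 and 26 percent shares of the 22 ZB shipment forecast. The constant and function names are our own, and because IDC says "over 22 ZB," the capacity results should be read as lower bounds.

```python
# Figures quoted from the IDC / Seagate "Data Age 2025" report cited in this post.
DATASPHERE_2018_ZB = 33.0
DATASPHERE_2025_ZB = 175.0
YEARS = 2025 - 2018  # seven annual growth periods

SHIPPED_2018_2025_ZB = 22.0  # "over 22 ZB", so results below are lower bounds
HDD_SHARE = 0.59
FLASH_SHARE = 0.26


def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate implied by growing from start to end."""
    return (end / start) ** (1.0 / periods) - 1.0


growth = cagr(DATASPHERE_2018_ZB, DATASPHERE_2025_ZB, YEARS)
hdd_zb = SHIPPED_2018_2025_ZB * HDD_SHARE
flash_zb = SHIPPED_2018_2025_ZB * FLASH_SHARE

print(f"Implied Datasphere growth: {growth:.1%} per year")
print(f"HDD capacity needed:   at least {hdd_zb:.1f} ZB")
print(f"Flash capacity needed: at least {flash_zb:.1f} ZB")
```

Run as is, it reports growth of roughly 27 percent per year, at least 13.0 ZB of HDD capacity, and at least 5.7 ZB of flash; the remaining share is split among tape, optical, and other media.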

Introducing Microsoft’s Project Zipline

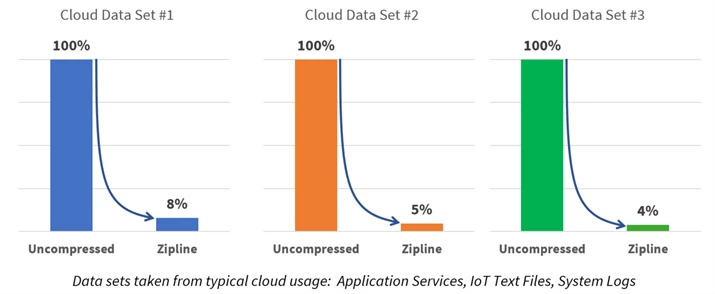

The ability to store and process data extremely efficiently is core to the cloud’s value proposition. Azure continues to grow dramatically, as does the amount of data it stores for many very data-intensive workloads. To address this, we’ve developed a cutting-edge compression algorithm and optimized the hardware implementation for the types of data we see in our cloud storage workloads. By engineering innovation at the systems level, we’ve been able to simultaneously achieve higher compression ratios, higher throughput, and lower latency than other currently available algorithms. This enables compression without compromise, allowing always-on data processing for a range of industry usage models, from the cloud to the edge.

Microsoft’s Project Zipline compression algorithm yields dramatically better results, with up to 2X higher compression ratios than the commonly used Zlib-L4 64KB model. Enhancements like this can benefit customers directly, for instance through potential cost savings, and indirectly, since cost-effective access to petabytes or exabytes of capacity could enable new scenarios for our customers.
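The Zlib-L4 64KB baseline is not defined further in this post. A minimal sketch of how such a baseline could be measured, assuming it means zlib at compression level 4 applied to independent 64 KB blocks, uses Python's standard `zlib` module. It measures only the software baseline, not Project Zipline's algorithm, which is not reproduced here; the function name `zlib_l4_64k_ratio` and the sample data are hypothetical.

```python
import os
import time
import zlib

BLOCK_SIZE = 64 * 1024  # assumption: "64KB" means independent 64 KB blocks
LEVEL = 4               # assumption: "L4" means zlib compression level 4


def zlib_l4_64k_ratio(data: bytes) -> float:
    """Compress data in independent 64 KB blocks at zlib level 4 and return
    the overall compression ratio (original size / compressed size)."""
    if not data:
        raise ValueError("cannot compute a ratio for empty input")
    compressed_total = 0
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        # Each block is compressed on its own, so no history crosses blocks.
        compressed_total += len(zlib.compress(block, LEVEL))
    return len(data) / compressed_total


if __name__ == "__main__":
    # Hypothetical samples: highly repetitive log-like text vs. random bytes.
    text = b"2019-03-14 INFO request served in 12 ms\n" * 20000
    noise = os.urandom(len(text))
    for name, sample in (("log-like text", text), ("random bytes", noise)):
        start = time.perf_counter()
        ratio = zlib_l4_64k_ratio(sample)
        elapsed = time.perf_counter() - start
        print(f"{name}: ratio {ratio:.2f}x, {len(sample) / elapsed / 1e6:.0f} MB/s")
```

Compressing each block independently caps how much redundancy zlib can find, which is why a block-based baseline is a natural yardstick for storage systems that must decompress blocks individually; a "2X higher ratio" claim would be checked by running both codecs over the same blocks of representative data.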

We are open sourcing Project Zipline compression algorithms, hardware design specifications, and Verilog source code for register-transfer level (RTL) design, with initial content available today and more coming soon. This contribution will provide collateral for integrating this new high-performance compression standard into a variety of silicon components across the industry (e.g., edge devices, networking, and offload accelerators). Contributing RTL at this level of detail as open source to OCP is industry leading. It sets a new precedent for frictionless collaboration on new technologies in the OCP ecosystem and opens the door to hardware innovation at the silicon level. Over time, we anticipate Project Zipline compression technology will make its way into several market segments and usage models, such as network data processing, smart SSDs, archival systems, cloud appliances, general-purpose microprocessors, IoT, and edge devices.

Project Zipline is a cutting-edge compression technology optimized for a large variety of datasets, and our release of RTL allows hardware vendors to use the reference design to produce chips that get the highest compression, lowest cost, and lowest power out of the algorithm. It’s available to the OCP ecosystem, so members can contribute to it and create further benefit for the entire ecosystem, including Azure and our customers.

Project Zipline partners and ecosystem

As a leader in the cloud storage space, I’m particularly proud that we’re able to take all the investment and innovation we’ve created and share it through OCP so that our partners can provide better solutions for their customers as well.

I look forward to seeing more of the industry joining OCP and collaborating so their customers can also see the benefit.

You can follow these links to learn more about our open source hardware development or Microsoft’s Project Zipline contribution on GitHub.

* Source: Data Age 2025, sponsored by Seagate with data from IDC Global DataSphere, Nov 2018