In June 2020, we announced the preview of the Live Video Analytics platform—a groundbreaking new set of capabilities in Azure Media Services that allows you to build workflows that capture and process video with real-time analytics from the intelligent edge to intelligent cloud. We continue to see customers across industries enthusiastically using Live Video Analytics on IoT Edge in preview, to drive positive outcomes for their organizations. Last week at Microsoft Ignite, we announced new features, partner integrations, and reference apps that unlock additional scenarios that include social distancing, factory floor safety, security perimeter monitoring, and more. The new product capabilities that enable these scenarios include:

- Spatial Analysis in Azure Computer Vision for Cognitive Services: Enhanced video analytics that factor in the spatial relationships between people and movement in the physical domain.

- Intel OpenVINO Model Server integration: Build complex, highly performant live video analytics solutions powered by OpenVINO toolkit, with optimized pre-trained models running on Intel CPUs (Atom, Core, Xeon), FPGAs, and VPUs.

- NVIDIA DeepStream integration: Support for hardware accelerated hybrid video analytics apps that combine the power of NVIDIA GPUs with Azure services.

- Arm64 support: Develop and deploy live video analytics solutions on low-power, low-footprint Linux Arm64 devices.

- Azure IoT Central Custom Vision Template: Build rich custom vision applications in anywhere from a few minutes to a few hours, with no coding required.

- High frame rate inferencing with Cognitive Services Custom Vision integration: Demonstrated in a manufacturing industry reference app that supports six useful out-of-the-box scenarios for factory environments.

Making video AI easier to use

Given the wide array of available CPU architectures (x86-64, Arm, and more) and hardware acceleration options (Intel Movidius VPU, iGPU, FPGA, NVIDIA GPU), plus the dearth of data science professionals to build customized AI, putting together a traditional video analytics solution entails significant time, effort and complexity.

The announcements we’re making today further our mission of making video analytics more accessible and useful for everyone. They include support for widely used chip architectures, including Intel, NVIDIA, and Arm; integration with hardware-optimized AI frameworks such as NVIDIA DeepStream and Intel OpenVINO; closer integration with complementary technologies across Microsoft’s AI ecosystem, namely Computer Vision for Spatial Analysis and Cognitive Services Custom Vision; and an improved development experience via the Azure IoT Central Custom Vision template and a manufacturing floor reference application.

Live video analytics with Computer Vision for Spatial Analysis

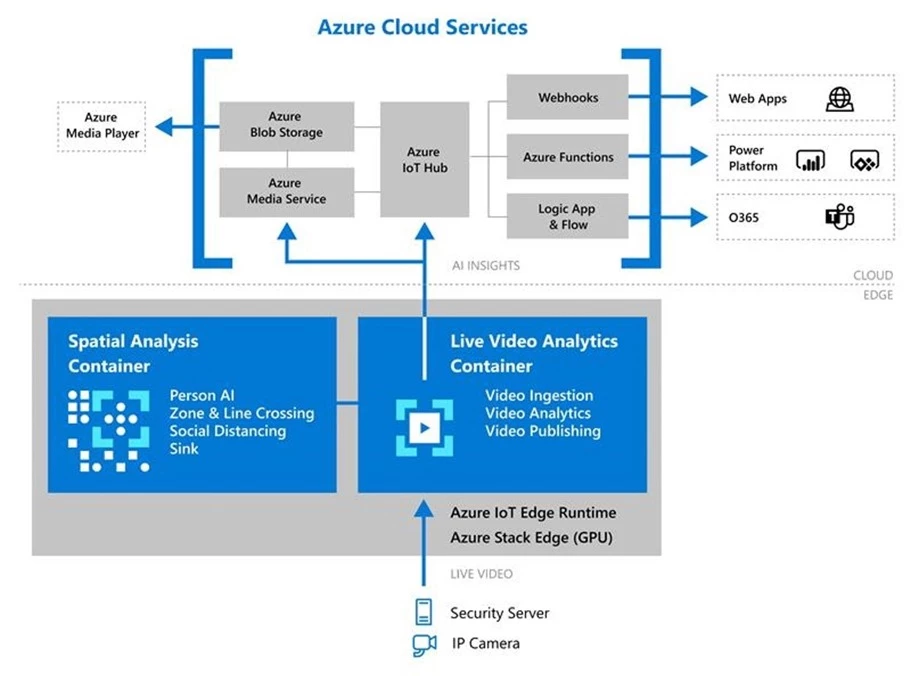

The Spatial Analysis capability of Computer Vision, a part of Azure Cognitive Services, can be used in conjunction with Live Video Analytics on IoT Edge to better understand the spatial relationships between people and movement in physical environments. We’ve added new operations that enable you to count people in a designated zone within the camera’s field of view, to track when a person crosses a designated line or area, and to detect when people violate a distance rule.
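The geometry behind these three operations can be sketched in a few lines of Python. This is an illustration only, not how the Spatial Analysis module is implemented: the function names are hypothetical, each person is reduced to a single (x, y) point in normalized image coordinates, and distances are measured in the image plane, whereas a real deployment would need camera calibration to reason about physical distance.

```python
from itertools import combinations
from math import hypot


def point_in_polygon(point, polygon):
    """Ray-casting test: True if point lies inside polygon, a list of (x, y) vertices."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_zone(people, zone):
    """Count the people whose position falls inside the zone polygon."""
    return sum(point_in_polygon(p, zone) for p in people)


def side_of_line(point, line):
    """Signed area test: positive on one side of the line, negative on the other."""
    (ax, ay), (bx, by) = line
    px, py = point
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def crossed_line(prev_pos, curr_pos, line):
    """True if a person moved from one side of the (infinitely extended) line to the other."""
    return side_of_line(prev_pos, line) * side_of_line(curr_pos, line) < 0


def distance_violations(people, min_distance):
    """Return index pairs of people who are closer together than min_distance."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(people), 2)
        if hypot(a[0] - b[0], a[1] - b[1]) < min_distance
    ]


# Hypothetical sample data: three people and a square zone in the top-left corner.
people = [(0.2, 0.2), (0.3, 0.25), (0.9, 0.9)]
zone = [(0.0, 0.0), (0.5, 0.0), (0.5, 0.5), (0.0, 0.5)]
print(count_in_zone(people, zone))
print(crossed_line((0.4, 0.1), (0.6, 0.1), ((0.5, 0.0), (0.5, 1.0))))
print(distance_violations(people, 0.2))
```

Keeping each operation as a small pure function over point lists makes it straightforward to apply them frame by frame to whatever detections an upstream model emits.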

The Live Video Analytics module will capture live video from real-time streaming protocol (RTSP) cameras and invoke the spatial analysis module for AI processing. These modules can be configured to enable video analysis and the recording of clips locally or to Azure Blob storage.

Deploying the Live Video Analytics and the Spatial Analysis modules on edge devices is made easier by Azure IoT Hub. Our recommended edge device is Azure Stack Edge with the NVIDIA T4 Tensor Core GPU. You can learn more about how to analyze live video with Computer Vision for Spatial Analysis in our documentation.

Live Video Analytics with Intel’s OpenVINO Model Server

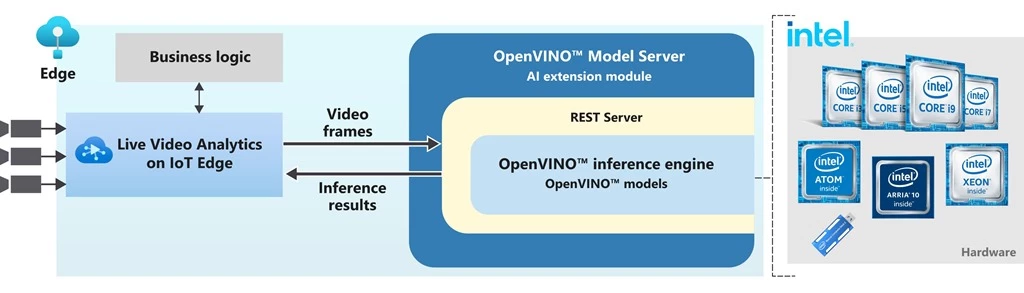

You can pair the Live Video Analytics on IoT Edge module with the OpenVINO Model Server (OVMS) – AI Extension from Intel to build complex, highly performant live video analytics solutions. OpenVINO Model Server is an inference server powered by the OpenVINO toolkit that’s highly optimized for computer vision workloads running on Intel hardware. As an extension, HTTP support and samples have been added to OVMS to facilitate the easy exchange of video frames and inference results between the inference server and the Live Video Analytics module, empowering you to run any object detection, classification, or segmentation model supported by the OpenVINO toolkit.
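To make the exchange of frames and results more concrete, here is a minimal Python sketch of an HTTP inference endpoint of the general kind described above. It is not the OVMS extension itself: `run_model`, `to_inference_json`, `ScoreHandler`, `serve`, the port number, and the JSON layout are all assumptions made for illustration, and the stand-in model returns a fixed detection instead of calling the OpenVINO toolkit.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def run_model(frame_bytes):
    """Hypothetical stand-in for a real model call; returns one fixed detection."""
    if not frame_bytes:
        return []
    return [{"label": "person", "confidence": 0.9, "box": (0.1, 0.2, 0.3, 0.4)}]


def to_inference_json(detections):
    """Convert detections into a JSON-serializable result (assumed layout)."""
    inferences = []
    for d in detections:
        left, top, width, height = d["box"]
        inferences.append({
            "type": "entity",
            "entity": {
                "tag": {"value": d["label"], "confidence": d["confidence"]},
                "box": {"l": left, "t": top, "w": width, "h": height},
            },
        })
    return {"inferences": inferences}


class ScoreHandler(BaseHTTPRequestHandler):
    """Accepts an encoded video frame in the POST body and replies with JSON results."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        frame = self.rfile.read(length)
        body = json.dumps(to_inference_json(run_model(frame))).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Run the endpoint until interrupted; the port is an arbitrary choice."""
    HTTPServer(("0.0.0.0", port), ScoreHandler).serve_forever()
```

Calling `serve()` starts the endpoint. Separating `run_model` from the HTTP handler mirrors the idea in the text that the model and accelerator can change without touching the application that sends the frames.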

You can customize the inference server module to use any optimized pre-trained model in the Open Model Zoo repository and, without having to change your application, select the acceleration mechanism that best suits your use case from the wide variety supported by Intel hardware, including CPUs (Atom, Core, Xeon), field-programmable gate arrays (FPGAs), and vision processing units (VPUs). In addition, you can select from a wide variety of use case-specific Intel-based solutions such as Developer Kits or Market Ready Solutions and incorporate the easily pluggable Live Video Analytics platform for scale.

“We are delighted to unleash the power of AI at the edge by extending OpenVINO Model Server for Azure Live Video Analytics. This extension will simplify the process of developing complex video solutions through a modular analytics platform. Developers are empowered to quickly build their edge to cloud applications once and deploy to Intel’s broad range of compute and AI accelerator platforms through our rich ecosystems.”—Adam Burns, VP, Edge AI Developer Tools, Internet of Things Group, Intel

Live Video Analytics with NVIDIA’s DeepStream SDK

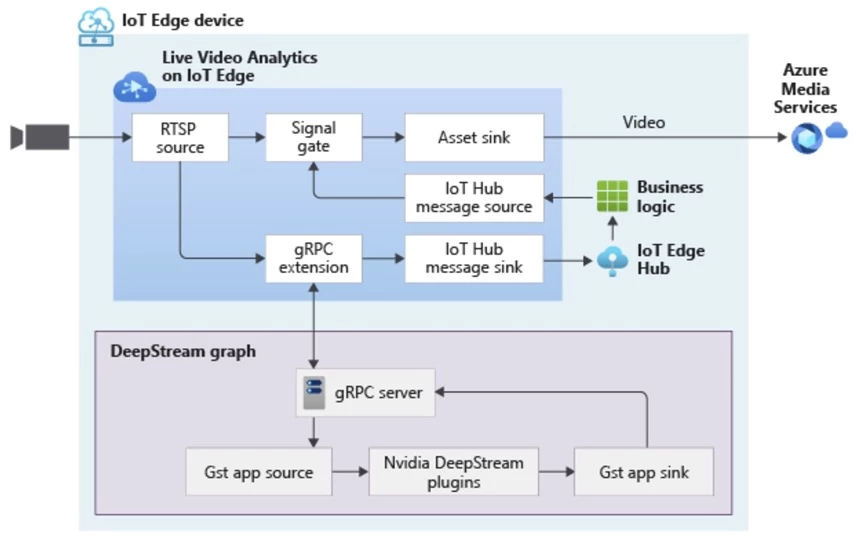

Live Video Analytics and NVIDIA DeepStream SDK can be used to build hardware-accelerated AI video analytics apps that combine the power of NVIDIA graphics processing units (GPUs) with Azure cloud services, such as Azure Media Services, Azure Storage, Azure IoT, and more. You can build sophisticated real-time apps that can scale across thousands of locations and can manage the video workflows on the edge devices at those locations via the cloud. You can explore some related samples on GitHub.

You can use Live Video Analytics to build video workflows that span the edge and cloud, and then combine it with the DeepStream SDK to build pipelines that extract insights from video using the AI of your choice.

The diagram above illustrates how you can record video clips that are triggered by AI events to Azure Media Services in the cloud. The samples are a testament to the robust design and openness of both platforms.
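The idea of event-triggered recording can be sketched as a small piece of scheduling logic: each AI event opens a recording window, and overlapping windows are merged into a single clip. The Python below is a sketch under stated assumptions and not the platform's implementation. The function name `clip_windows` and the `pre_roll` and `post_roll` parameters (seconds of video kept before and after each event) are hypothetical.

```python
def clip_windows(event_times, pre_roll=5.0, post_roll=10.0):
    """Turn event timestamps (in seconds) into merged (start, end) recording windows."""
    windows = []
    for t in sorted(event_times):
        start = max(0.0, t - pre_roll)
        end = t + post_roll
        if windows and start <= windows[-1][1]:
            # This event overlaps the previous window, so extend that clip.
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))
        else:
            windows.append((start, end))
    return windows


# Two events close together share one clip; the third gets its own.
print(clip_windows([12, 15, 60]))  # [(7.0, 25.0), (55.0, 70.0)]
```

Merging overlapping windows avoids recording the same footage twice when a burst of detections arrives in quick succession.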

“The powerful combination of NVIDIA DeepStream SDK and Live Video Analytics powered by the NVIDIA computing stack helps accelerate the development and deployment of world-class video analytics. Our partnership with Microsoft will advance adoption of AI-enabled video analytics from edge to cloud across all industries and use cases.”—Deepu Talla, Vice President and General Manager of Edge Computing, NVIDIA

Live Video Analytics now runs on Arm

You can now run Live Video Analytics on IoT Edge on Linux Arm64v8 devices, enabling you to use low power-consumption, low-footprint devices such as the NVIDIA® Jetson™ series.

Develop solutions rapidly using the IoT Central video analytics template

The new IoT Central video analytics template simplifies the setup of an Azure IoT Edge device to act as a gateway between cameras and Azure cloud services. It integrates the Live Video Analytics video inferencing pipeline and OpenVINO Model Server, an AI inference server by Intel, enabling customers to build a fully working end-to-end solution in a couple of hours with no code. It’s fully integrated with the Azure Media Services pipeline to capture, record, and play analyzed videos from the cloud.

The template installs IoT Edge modules such as an IoT Central Gateway, Live Video Analytics on IoT Edge, Intel OpenVINO Model Server, and an ONVIF module on your edge devices. These modules help the IoT Central application configure and manage the devices, ingest live video streams from the cameras, and easily apply AI models such as vehicle or person detection. Simultaneously in the cloud, Azure Media Services and Azure Storage record and stream relevant portions of the live video feed. Refer to our IoT Show episode and related blog post for a full overview and guidance on how to get started.

Integration of Cognitive Services Custom Vision models in Live Video Analytics

Many organizations already have a large number of cameras deployed to capture video data but are not conducting any meaningful analysis on the streams. With the advent of Live Video Analytics, applying even basic image classification and object detection algorithms to live video feeds can help unlock truly useful insights and make businesses safer, more secure, more efficient, and ultimately more profitable. Potential scenarios include:

- Detecting if employees in an industrial/manufacturing plant are wearing hard hats to ensure their safety and compliance with local regulations.

- Counting products or detecting defective products on a conveyor belt.

- Detecting the presence of unwanted objects (people, vehicles, and more) on the premises and notifying security.

- Detecting low-stock and out-of-stock products on retail store shelves or on factory parts shelves.

Developing AI models from scratch to perform tasks like these and deploying them at scale to work on live video streams on the edge entails a non-trivial amount of work. Doing it in a scalable and reliable way is even harder and more expensive. The integration of Live Video Analytics on IoT Edge with Cognitive Services Custom Vision makes it possible to implement working solutions for all of these scenarios in a matter of minutes to a few hours.

You begin by building and training a computer vision model by uploading pre-labeled images to the Custom Vision service. This doesn’t require you to have any prior knowledge of data science, machine learning, or AI. Then, you can use Live Video Analytics to deploy the trained custom model as a container on the edge and analyze multiple camera streams in a cost-effective manner.
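Once a trained model is returning predictions for each frame, turning them into one of the scenarios above is mostly a matter of filtering and counting. The Python sketch below assumes each prediction is a dictionary with `tagName` and `probability` fields. That layout, the tag names `person` and `hard_hat`, the threshold, and both function names are assumptions for illustration, and the hard hat check is a deliberately crude heuristic that compares counts without matching hats to individual people.

```python
from collections import Counter


def count_confident_tags(predictions, threshold=0.6):
    """Count predictions per tag, keeping only those at or above the threshold."""
    return Counter(
        p["tagName"] for p in predictions if p["probability"] >= threshold
    )


def missing_hard_hats(predictions, threshold=0.6):
    """Crude estimate of how many detected people have no detected hard hat."""
    counts = count_confident_tags(predictions, threshold)
    return max(0, counts["person"] - counts["hard_hat"])


# Hypothetical predictions for a single frame.
frame_predictions = [
    {"tagName": "person", "probability": 0.95},
    {"tagName": "person", "probability": 0.80},
    {"tagName": "hard_hat", "probability": 0.90},
    {"tagName": "hard_hat", "probability": 0.30},  # below threshold, ignored
]
print(count_confident_tags(frame_predictions))
print(missing_hard_hats(frame_predictions))
```

The same counting function covers the product counting and shelf stock scenarios by swapping in the relevant tag names.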

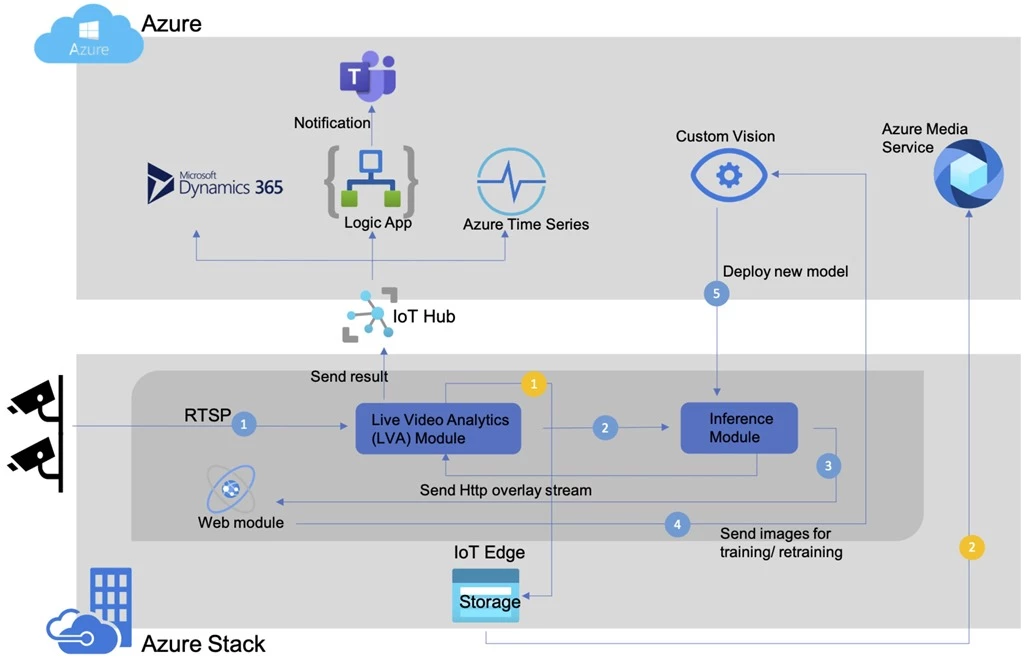

Live Video Analytics powered manufacturing floor reference app

We have partnered with the Azure Stack team to evolve the Factory.AI solution, a turnkey application that makes it easy to train and deploy vision models without the need for data science knowledge. The solution includes capabilities for object counting, employee safety, defect detection, machine misalignment, tool detection, and part confirmation. All of these scenarios are powered by the integration of Live Video Analytics running on Azure Stack Edge devices.

In addition, the Factory.AI solution allows customers to train and deploy their own custom ONNX models using the Custom Vision SDK. Once a custom model is deployed on the edge, the reference app leverages gRPC from Live Video Analytics for accurate, high frame rate inferencing. You can learn more about the manufacturing reference app at Microsoft Ignite or by visiting the Azure intelligent edge patterns page.

Get started today

In closing, we’d like to thank everyone who is already participating in the Live Video Analytics on IoT Edge preview. We appreciate your ongoing feedback to our engineering team as we work together to fuel your success with video analytics both in the cloud and on the edge. For those of you who are new to our technology, we’d encourage you to get started today with these helpful resources:

- Watch the Live Video Analytics introduction video.

- Find more information on the product details page.

- Watch the Live Video Analytics demo.

- Try the new Live Video Analytics features today with an Azure free trial account.

- Register on the Media Services Tech Community to hear directly from the engineering team about upcoming features, provide feedback, and discuss roadmap requests.

- Download Live Video Analytics on IoT Edge from the Azure Marketplace.

- Get started quickly with our C# and Python code samples.

- Review our product documentation.

- Search the GitHub repo for Live Video Analytics open source projects.

- Contact amshelp@microsoft.com for questions.

Intel, the Intel logo, Atom, Core, Xeon, and OpenVINO are registered trademarks of Intel Corporation or its subsidiaries.

NVIDIA and the NVIDIA logo are registered trademarks or trademarks of NVIDIA Corporation in the U.S. and/or other countries. Other company and product names may be trademarks of the respective companies with which they are associated.